Recall from our previous post on Hive connections with ORAAH that R commands such as nrow() and dim() trigger map-reduce jobs this is not the case here with our Big Data SQL-enabled external table: > ore.sync(table=c("USER_INFO", "WEBLOGS")) # the second table is EXTERNAL (Big Data SQL) > ore.connect(user="moviedemo", sid="orcl", host="localhost", password="welcome1", port=1521, all=FALSE) Here is a simple ORE script for creating the table USER_INFO from the existing CSV file notice that we don’t need to use a single SQL statement – all the processing is done in ORE with R statements: non-external) database table with the provided user information. Let us remind here that the weblogs data come from a use case which we have described in a previous post to present an equivalent in-database analysis using ORE, we also create a regular (i.e. Adding the user information table in the database When we are satisfied with the table definition, pressing OK will actually create the (external) table in the database we have already defined ( moviedemo in our case). After these changes, we can inspect the DDL statement from the corresponding tab before creating the table: We need also to set the default directory in the “External Table Properties” tab (we choose DEFAULT_DIR here). If necessary, we can alter data types and other table properties here let’s change the DATETIME column to type TIMESTAMP. 
Selecting “Use in Oracle Big Data SQL…” in the menu shown above, and after answering a pop-up message for choosing the target Oracle database (we choose the existing moviedemo database for simplicity), we get to the “Create Table” screen: Creating an external Big Data SQL-enabled tableĪfter we have connected to Hive in SQL Developer, using an existing Hive table in Big Data SQL is actually as simple and straightforward as a right-click on the respective table (in our case weblogs), as shown in the figure below: Notice only that, if you use a version of SQL Developer later than 4.0.3 (we use 4.1.1 here), there is a good chance that you will not be able to use the Data Modeler in the way it is described in that Oracle blog post, due to what is probably a bug in later versions of SQL Developer (see the issue I have raised in the relevant Oracle forum).

#Oracle sql developer 4.0.3 drivers#

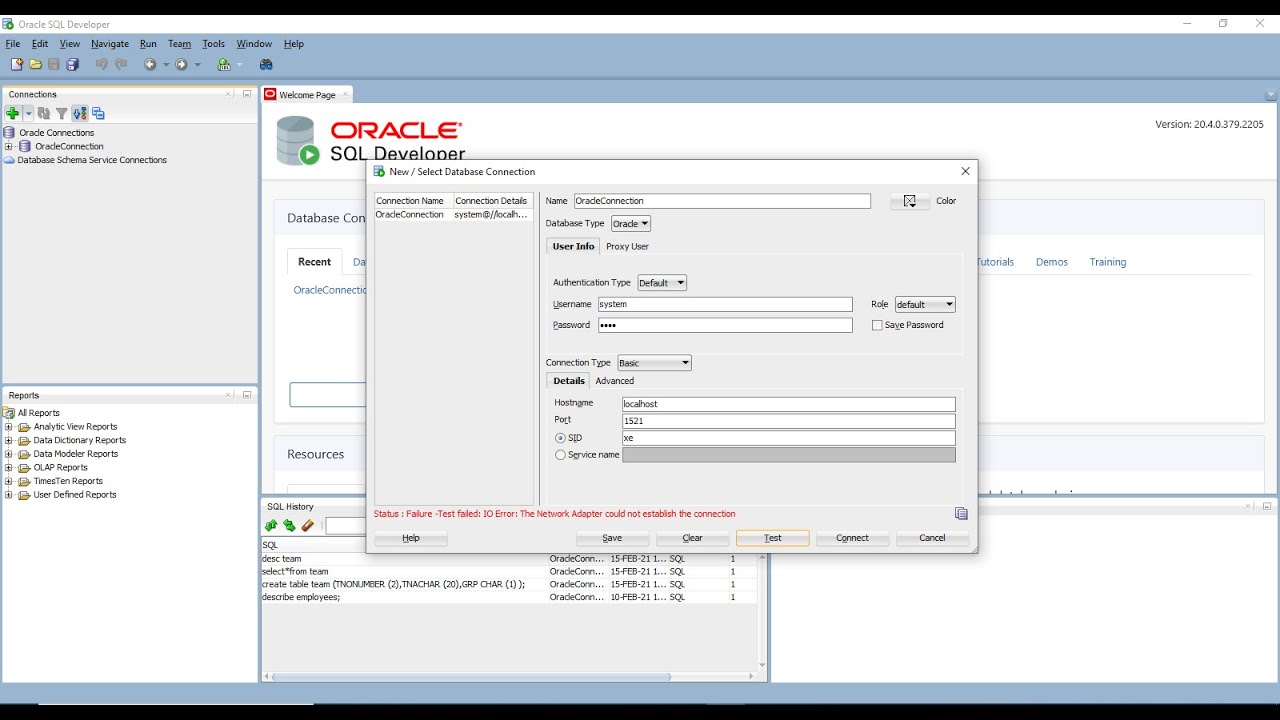

In this post we will provide a step-by-step demonstration, using the weblogs data we have already inserted to Hive in a previous post our configuration is the one included in version 4.2.1 of the Oracle Big Data Lite VM.īefore enabling Big Data SQL tables, we have to download the Cloudera JDBC drivers for Hive and create a Hive connection in SQL Developer we will not go into these details here, as they are adequately covered in a relevant Oracle blog post. I am happy to announce that the answer is an unconditional yes. Oracle Database external tables based on HDFS or Hive data), since I have never seen such a combination mentioned in the relevant Oracle documentation and white papers. I was wondering recently if I could use Oracle R Enterprise (ORE) to query Big Data SQL tables (i.e.
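The two ORE steps described above (creating USER_INFO from the CSV file, then querying the external table with nrow() and dim()) can be sketched roughly as follows. This is a minimal sketch under stated assumptions, not the script used in this post: the helper names `create_user_info()` and `profile_table()`, the `push` argument and the CSV file name are all hypothetical, and the ORE calls (`ore.exists()`, `ore.drop()`, `ore.create()`) assume the ORE client packages are installed and a connection has been opened with `ore.connect()` as above. `profile_table()` simply times nrow() and dim() on any table-like object; on an ore.frame such as WEBLOGS, ORE's transparency layer evaluates these in the database instead of in local R memory.

```r
# Minimal sketch under stated assumptions: helper names, the CSV path and
# the `push` argument are hypothetical, not taken from the original post.
# ORE functions are only called when push = TRUE, so the helpers can be
# defined (and tried on local data) without a database connection.

create_user_info <- function(csv_path, push = TRUE) {
  # Read the user information into a local data.frame
  users <- read.csv(csv_path, stringsAsFactors = FALSE)
  if (push) {
    # Assumes an open ORE connection (ore.connect) in this R session.
    # Drop an older copy so the script can be re-run safely.
    if (ore.exists("USER_INFO")) ore.drop(table = "USER_INFO")
    # ore.create() writes the data.frame to a new database table
    ore.create(users, table = "USER_INFO")
  }
  invisible(users)
}

profile_table <- function(tbl) {
  # Time two basic "size" queries; for an ore.frame these are evaluated
  # in the database, for a local data.frame they run in memory.
  t_rows <- system.time(n <- nrow(tbl))[["elapsed"]]
  t_dim  <- system.time(d <- dim(tbl))[["elapsed"]]
  list(nrow = n, dim = d, seconds = c(nrow = t_rows, dim = t_dim))
}

# Usage in the Big Data Lite VM (assumed file name, open ORE connection):
# create_user_info("user_info.csv")
# ore.sync(table = c("USER_INFO", "WEBLOGS"))
# ore.attach()             # expose the synced tables by name
# profile_table(WEBLOGS)   # evaluated in the database, not in local R
```

Keeping the database write behind the `push` flag is only a convenience for this sketch: the same function can be checked against a local CSV before touching the moviedemo schema.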
